Instrumentation

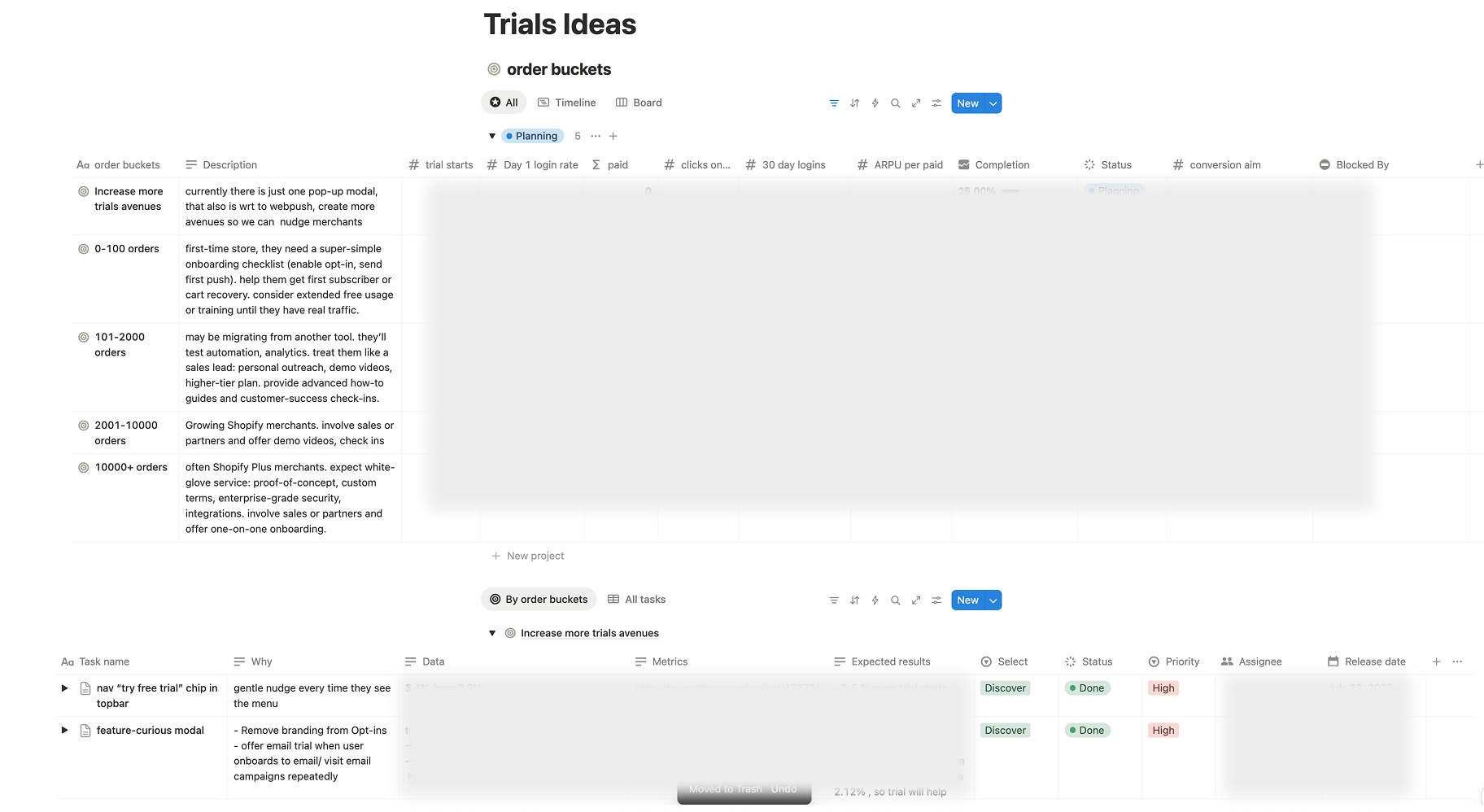

Segment trials by order bucket × UTM × offer type (primary, fallback, AI, managed) × surface (modal, topbar, feature, pricing, seasonal).

Simplified onboarding that reduces confusion, ships a first win, and builds trust across email, SMS, and push.

Order-aware offers, AI creatives, and a safety-net trial system.

The redesigned trial system didn't just look better—it performed better. By removing blank-page fear and driving urgency, we unlocked higher-value conversions.

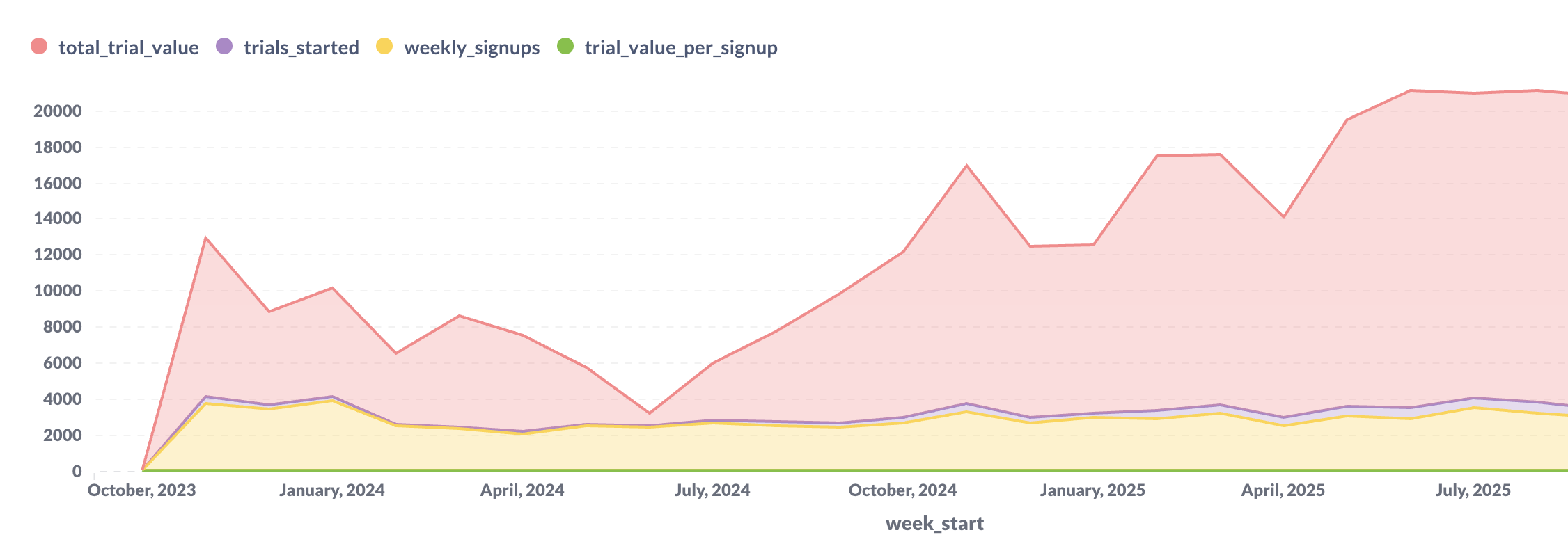

Revenue Lift

Segmented offers and AI creatives unlocked higher-value conversions.

Day-2 Campaign Sends

AI templates successfully removed the "blank-page fear" for new users.

Managed Setup ARPU

Capacity signals drove urgency for high-ARPU segments.

Top-Funnel Lift

More qualified trial starts generated through clearer messaging.

Conversion Rate

Significant improvement in trial-to-paid upgrade metrics.

I rebuilt PushOwl’s product-led growth (PLG) surface so merchants see the finished experience first: the UI shows the right plan, AI help, or people-led path before we ever talk pricing.

PushOwl is a Shopify app (now part of Brevo) that helps e-commerce stores send push notifications, email, and SMS campaigns. Merchants install it, connect their store, and use it to recover abandoned carts, announce sales, and re-engage customers.

Despite steady Shopify app store traffic, product-driven revenue growth had plateaued. Trial starts were flat, activation rates were low, and refund requests were climbing.

"Merchants weren't afraid of price; they were afraid of wasting time."

Fix: Trial → Activation → Conversion

Increase activation and paid conversion without eroding trial quality or trust, while reducing refund anxiety.

Success metrics: Trial starts ↑, time-to-first-campaign ↓, trial value per signup steady, refund questions ↓

The core hypothesis: different store sizes have different needs, fears, and time constraints. A one-size-fits-all trial was leaving revenue on the table.

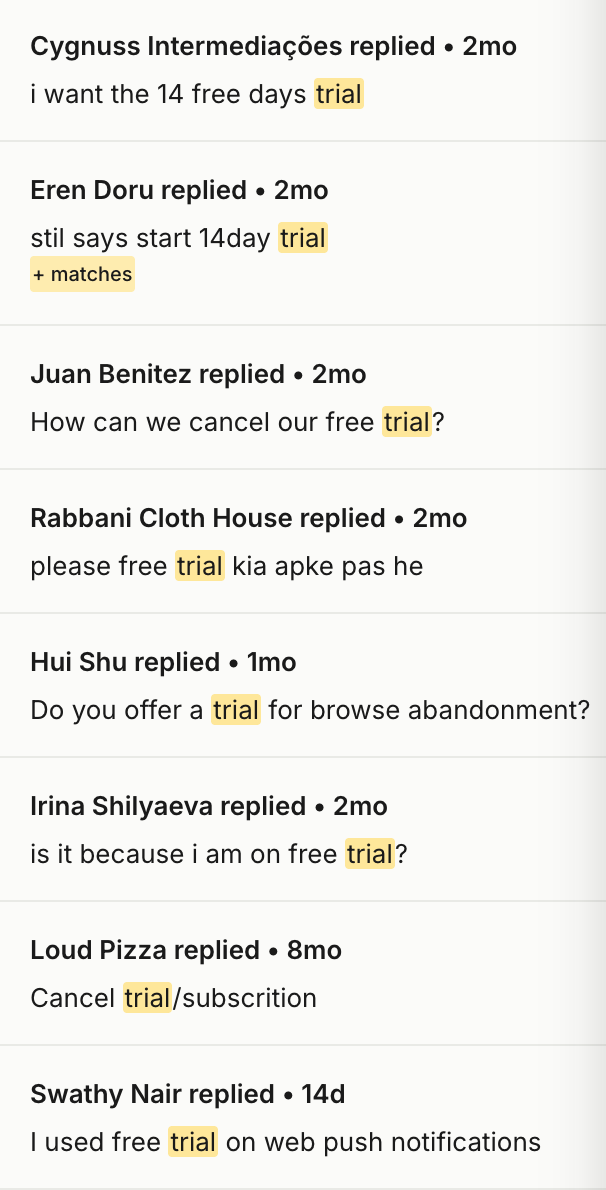

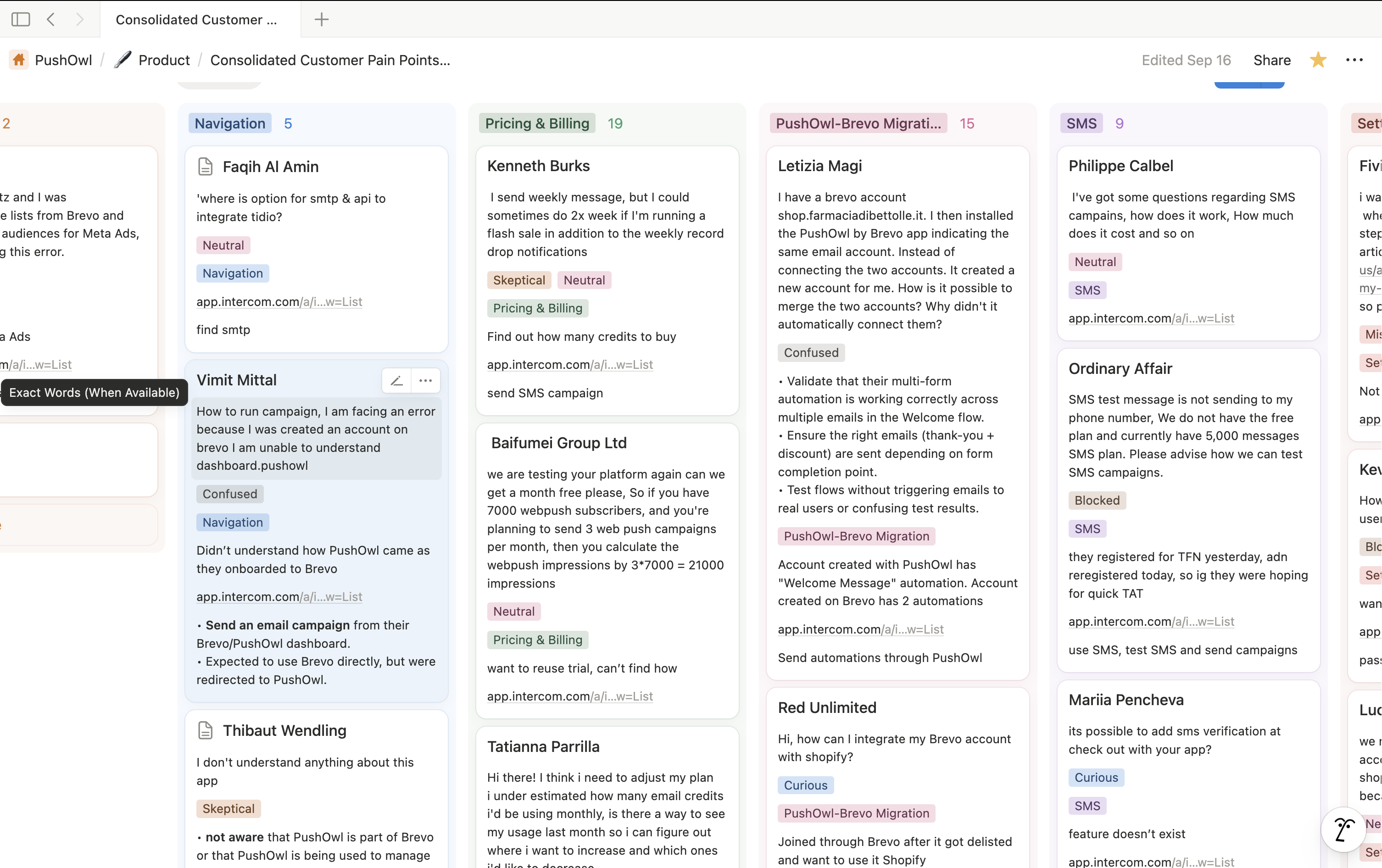

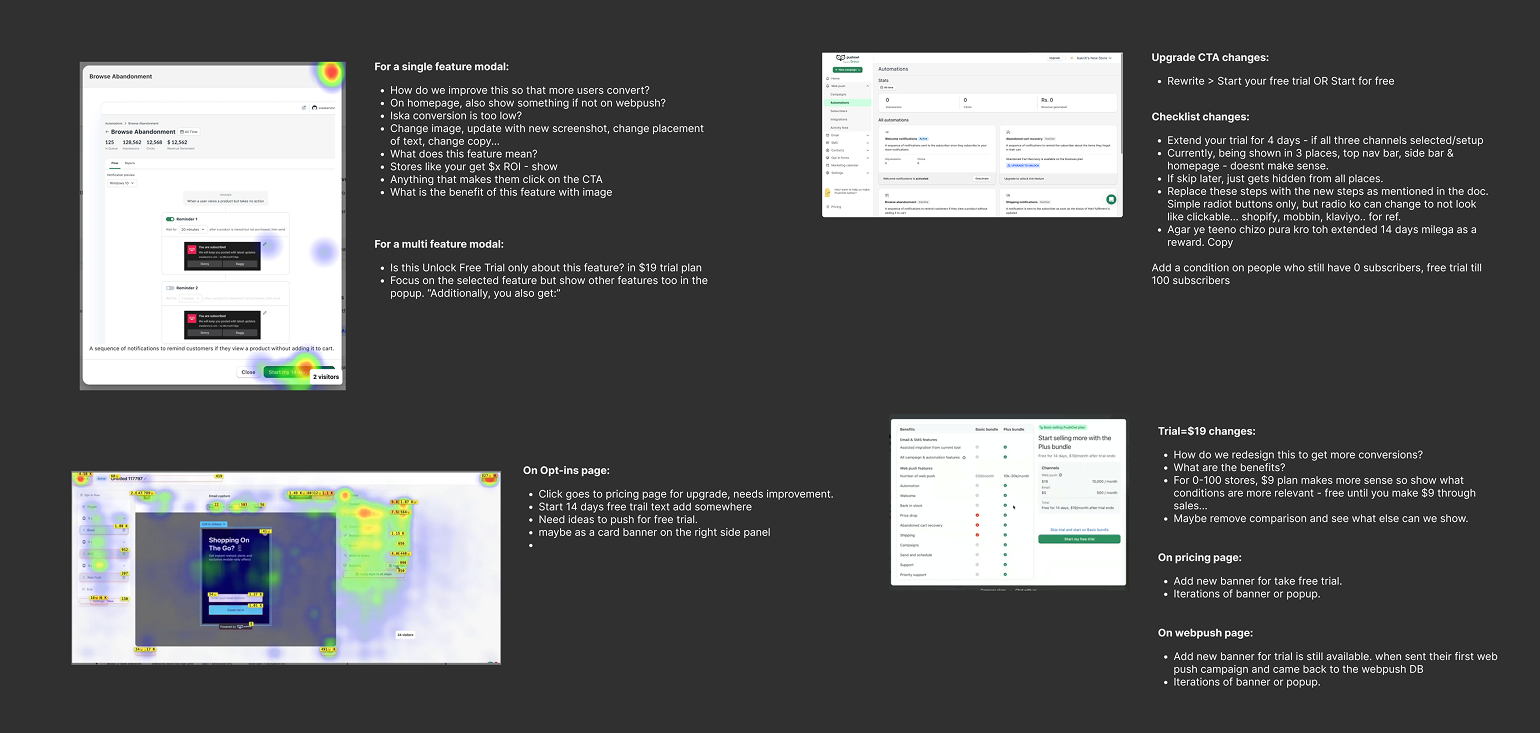

Before designing solutions, I spent 3 weeks analyzing Intercom data, support tickets, PostHog funnels, and Metabase cohorts to understand exactly where and why trials were failing.

I installed the trial but I'm scared to get charged if I forget to cancel...

The pricing changed after I signed up? Or did I miss something?

Does the trial include all features or just basic ones?

I sent one campaign but now it's asking me to upgrade?

What happens if I go over my order limit?

Key finding: 60% of trial users never sent a campaign. The biggest drop-off happened between "trial started" and "first campaign created" - not at pricing.

Key insight: A 50-order Shopify store and a 10,000-order brand have completely different needs, fears, and time constraints. Treating them the same = lost revenue.

Every touchpoint reminds users they stay in control while we chase a first win.

Charged only after 14 days through Shopify. Cancel anytime - link visible on every page.

Cancel in two clicks with confirmation before billing.

Frequency caps, subscriber limits, and review-before-send built in.

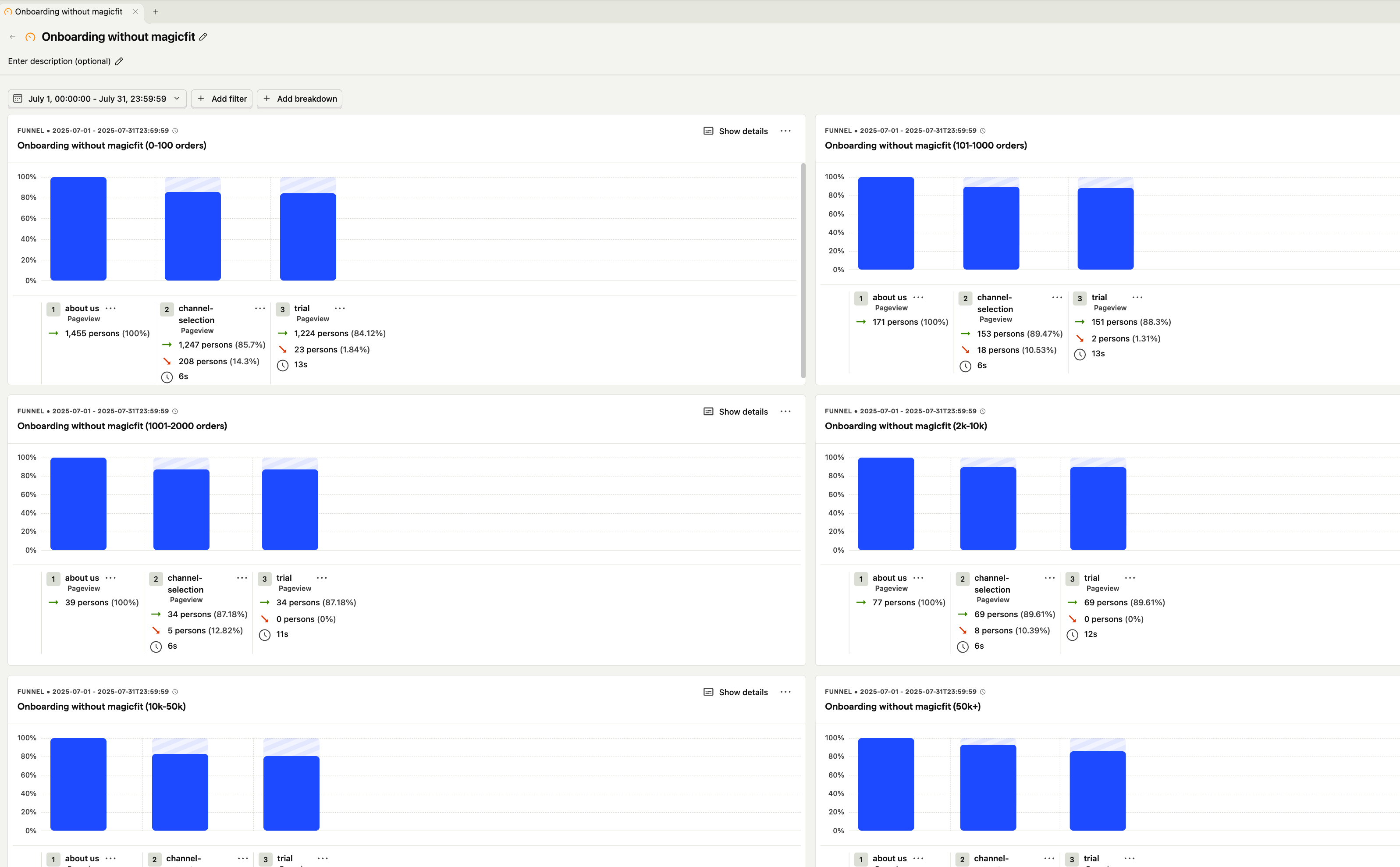

The data showed clear patterns: stores with different order volumes had completely different behaviors, needs, and price sensitivity. A 50-order store starting out needed hand-holding and quick wins. A 10,000-order brand needed efficiency and outcomes.

After I analyzed Metabase cohorts across several dimensions (store age, traffic, revenue, category), Shopify order count emerged as the strongest predictor of trial behavior and conversion patterns. Each order-count cohort had its own profile, behavior, and needs:

Profile: New stores, first-time founders

Behavior: Highest drop-off, blank-page paralysis

Need: Immediate value, AI templates, hand-holding

Profile: Growing stores, price-conscious

Behavior: Trial-curious but hesitant

Need: Clear trial terms, fallback options

Profile: Established stores, feature-focused

Behavior: Explore features before committing

Need: AI capabilities, self-serve with hints

Profile: Enterprise brands, time-poor

Behavior: Want outcomes, not knobs

Need: Managed setup, tight SLA, premium tier

Cut-offs chosen based on conversion rate inflection points in Metabase data
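A rough way to reproduce this kind of cut-off search: bin stores by Shopify order count, compute each bin's trial-to-paid conversion rate, and place bucket boundaries where conversion changes most between adjacent bins. This is a simple step-change heuristic standing in for the inflection-point analysis, not the actual Metabase query; `pick_cutoffs` is a hypothetical helper and the example rates are made up.

```python
from typing import List, Tuple


def pick_cutoffs(bins: List[Tuple[int, float]], n_buckets: int) -> List[int]:
    """Return n_buckets - 1 cut-offs placed where conversion changes most.

    bins: (upper order-count bound, conversion rate) pairs, sorted by bound.
    A cut-off at X means "stores with <= X orders fall in the lower bucket".
    """
    if n_buckets < 1 or len(bins) < n_buckets:
        raise ValueError("need at least one bin per bucket")
    # Absolute jump in conversion rate between each bin and the next one.
    jumps = [
        (abs(next_rate - rate), upper)
        for (upper, rate), (_, next_rate) in zip(bins, bins[1:])
    ]
    # Keep the largest jumps, then return their boundaries in ascending order.
    largest = sorted(jumps, key=lambda j: j[0], reverse=True)[: n_buckets - 1]
    return sorted(upper for _, upper in largest)


# Made-up example data: (bin upper bound in orders, trial-to-paid conversion rate).
bins = [(50, 0.05), (100, 0.06), (250, 0.11), (500, 0.12),
        (1_000, 0.13), (5_000, 0.14), (10_000, 0.15), (50_000, 0.24)]
print(pick_cutoffs(bins, n_buckets=3))  # [100, 10000]
```

A step-change rule is easy to explain to stakeholders, but it is sensitive to noisy small bins, so in practice the bins would need enough stores each for the rates to be stable.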

Based on discovery findings, I formed three testable hypotheses. Each became a distinct offer in the system:

Hypothesis: Pre-filled campaign templates with AI-generated copy, images, and safe defaults will increase Day-2 campaign sends and reduce drop-off in the 0-100 order segment.

Measure: % of trials sending first campaign within 48 hours
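A minimal sketch of this measure, assuming each trial record exposes its start time and the time its first campaign was sent (`None` if it never was). Both the record shape and treating exactly 48 hours as inside the window are assumptions.

```python
from datetime import datetime, timedelta
from typing import Optional, Sequence, Tuple

FIRST_SEND_WINDOW = timedelta(hours=48)


def day2_send_rate(trials: Sequence[Tuple[datetime, Optional[datetime]]]) -> float:
    """Share of trials whose first campaign went out within 48 hours of trial start.

    Each trial is (trial_started_at, first_campaign_sent_at or None if never sent).
    """
    if not trials:
        return 0.0
    hits = sum(
        1
        for started_at, first_sent_at in trials
        if first_sent_at is not None and first_sent_at - started_at <= FIRST_SEND_WINDOW
    )
    return hits / len(trials)


t0 = datetime(2024, 1, 1, 9, 0)
trials = [
    (t0, t0 + timedelta(hours=10)),  # counts
    (t0, t0 + timedelta(hours=48)),  # counts: exactly on the boundary
    (t0, t0 + timedelta(hours=50)),  # too late
    (t0, None),                      # never sent
]
print(day2_send_rate(trials))  # 0.5
```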

Hypothesis: Routing each order bucket to a tailored offer (AI bundle for small stores, managed setup for large stores) will increase trial starts without degrading trial quality.

Measure: Trial starts by cohort, trial value per signup
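The case study doesn't spell out how trial value per signup is computed. The sketch below assumes it is the paid revenue attributed to a cohort's trial signups divided by the number of signups, with non-paying signups counted as zero so that a flood of low-intent trials pulls the number down. Cohort names and amounts are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def cohort_trial_metrics(signups: Iterable[Tuple[str, float]]) -> Dict[str, Dict[str, float]]:
    """Trial starts and trial value per signup for each cohort.

    signups: (cohort, attributed_revenue) per trial start; use 0.0 for signups
    that never paid, so they still dilute the average.
    """
    starts: Dict[str, int] = defaultdict(int)
    revenue: Dict[str, float] = defaultdict(float)
    for cohort, amount in signups:
        starts[cohort] += 1
        revenue[cohort] += amount
    return {
        cohort: {
            "trial_starts": starts[cohort],
            "value_per_signup": revenue[cohort] / starts[cohort],
        }
        for cohort in starts
    }


metrics = cohort_trial_metrics([("new", 0.0), ("new", 20.0), ("enterprise", 300.0)])
print(metrics["new"])  # {'trial_starts': 2, 'value_per_signup': 10.0}
```

Counting non-payers as zero is what makes this a quality guardrail: more trial starts only help if the average holds.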

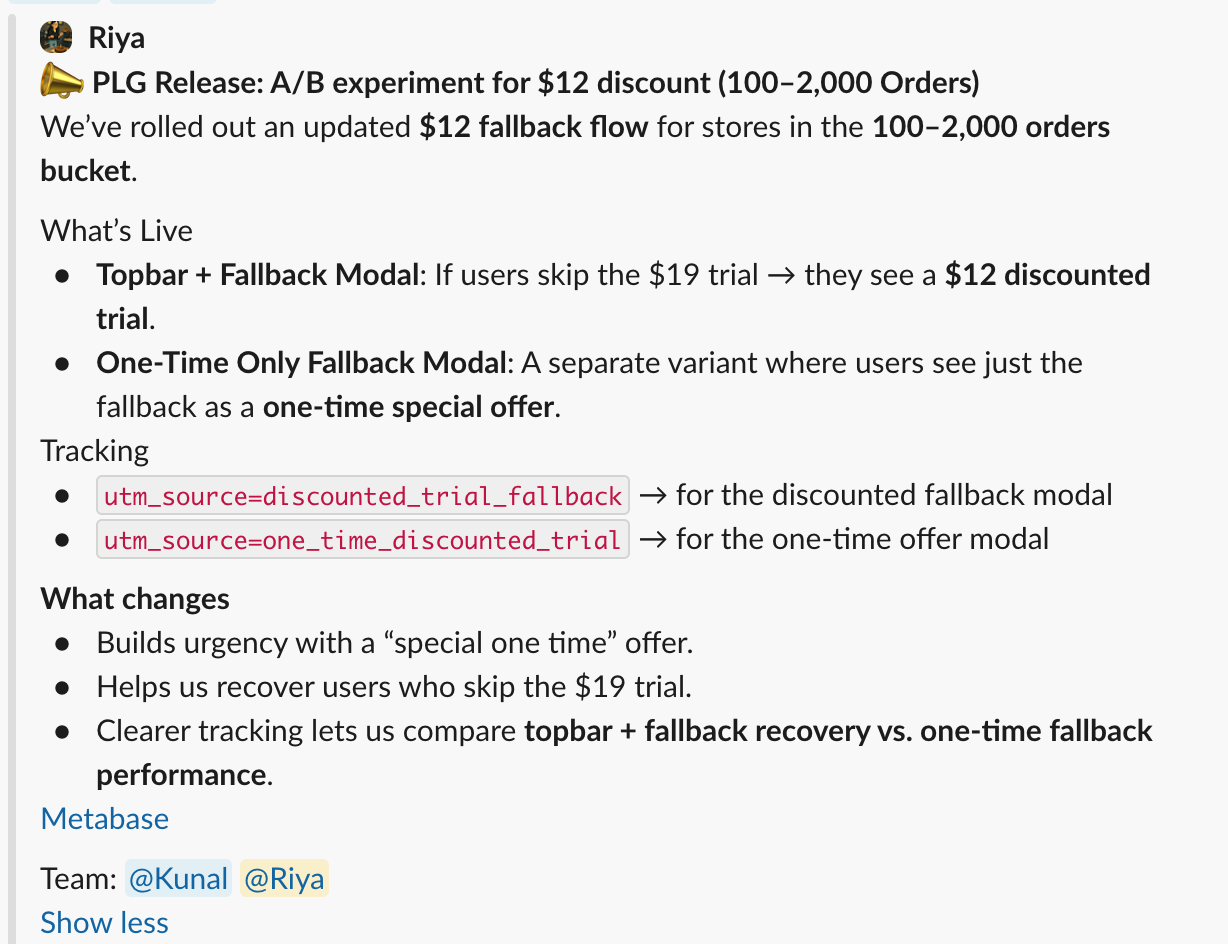

Hypothesis: Offering a discounted trial only after stall signals (modal closed, no progress after 3 days) will recover hesitant users without conditioning everyone to wait for discounts.

Measure: Conversion rate of stalled users, discount exposure % per cohort
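Both measures are per-cohort ratios. In this sketch, the `TrialUser` record and its `stalled`, `saw_discount`, and `converted` flags are hypothetical; in practice the stall flag would come from the signals named in the hypothesis (modal closed, no progress after 3 days). Exposure is discount views over all trial users in the cohort; stalled conversion is conversions over stalled users only.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class TrialUser:
    cohort: str
    stalled: bool       # hit a stall signal during the trial
    saw_discount: bool  # was shown the discounted fallback
    converted: bool     # upgraded to a paid plan


def stall_metrics(users: Iterable[TrialUser]) -> Dict[str, Dict[str, Optional[float]]]:
    total: Dict[str, int] = defaultdict(int)
    exposed: Dict[str, int] = defaultdict(int)
    stalled: Dict[str, int] = defaultdict(int)
    stalled_converted: Dict[str, int] = defaultdict(int)
    for u in users:
        total[u.cohort] += 1
        exposed[u.cohort] += int(u.saw_discount)
        if u.stalled:
            stalled[u.cohort] += 1
            stalled_converted[u.cohort] += int(u.converted)
    return {
        cohort: {
            # Share of the whole cohort that ever saw the discount.
            "discount_exposure": exposed[cohort] / total[cohort],
            # Conversion among stalled users only; None if nobody stalled.
            "stalled_conversion": (
                stalled_converted[cohort] / stalled[cohort] if stalled[cohort] else None
            ),
        }
        for cohort in total
    }
```

Keeping exposure low while stalled conversion rises is the signature of a safety net that isn't training everyone to wait.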

Understanding when users show buying intent allowed us to surface the right offer at the right moment - not too early, not too late.

| Signal | Action | Timing |
| --- | --- | --- |
| User completed their first push notification | Show value receipt ("You reached X subscribers") + upsell to paid plan | Within 24 hours of send |
| User logged in 5+ times, sent 3+ campaigns | In-app modal with plan comparison + "Continue with paid plan" | Day 10-12 of trial |
| Campaign drafted but sitting idle >24 hours | Completion nudge ("You're 90% there") + template suggestions | 24-48 hours after draft save |
| No campaigns sent, low engagement, approaching end of trial | Discounted trial fallback or managed setup teaser | Day 5+ with no progress |

Key insight: Intent isn't binary. We built a spectrum - from high-intent (already shipping campaigns) to stalled (need a nudge or fallback). Each segment got a different intervention at a different time.
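The four signal, action, and timing rules above can be read as a small decision function. The sketch below encodes them in one assumed precedence order (high intent, then a fresh first send, then an idle draft, then a stall); the field names and return values are hypothetical, and the "low engagement" condition is left out for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrialState:
    trial_day: int                                   # days since trial start (1-based)
    logins: int
    campaigns_sent: int
    hours_since_first_send: Optional[float] = None   # None until the first push goes out
    hours_since_draft_saved: Optional[float] = None  # None when there is no unsent draft


def next_intervention(s: TrialState) -> Optional[str]:
    # High intent: engaged user late in the trial -> plan comparison modal.
    if s.logins >= 5 and s.campaigns_sent >= 3 and 10 <= s.trial_day <= 12:
        return "plan_comparison_modal"
    # First win just landed -> value receipt + upsell, within 24 hours of the send.
    if s.hours_since_first_send is not None and s.hours_since_first_send <= 24:
        return "value_receipt_upsell"
    # Draft idle for more than a day -> completion nudge, until 48 hours after saving.
    if s.hours_since_draft_saved is not None and 24 < s.hours_since_draft_saved <= 48:
        return "completion_nudge"
    # Stalled: nothing sent by day 5 -> discounted fallback or managed teaser.
    if s.campaigns_sent == 0 and s.trial_day >= 5:
        return "stall_fallback"
    # No rule fired: stay quiet rather than nudge.
    return None
```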

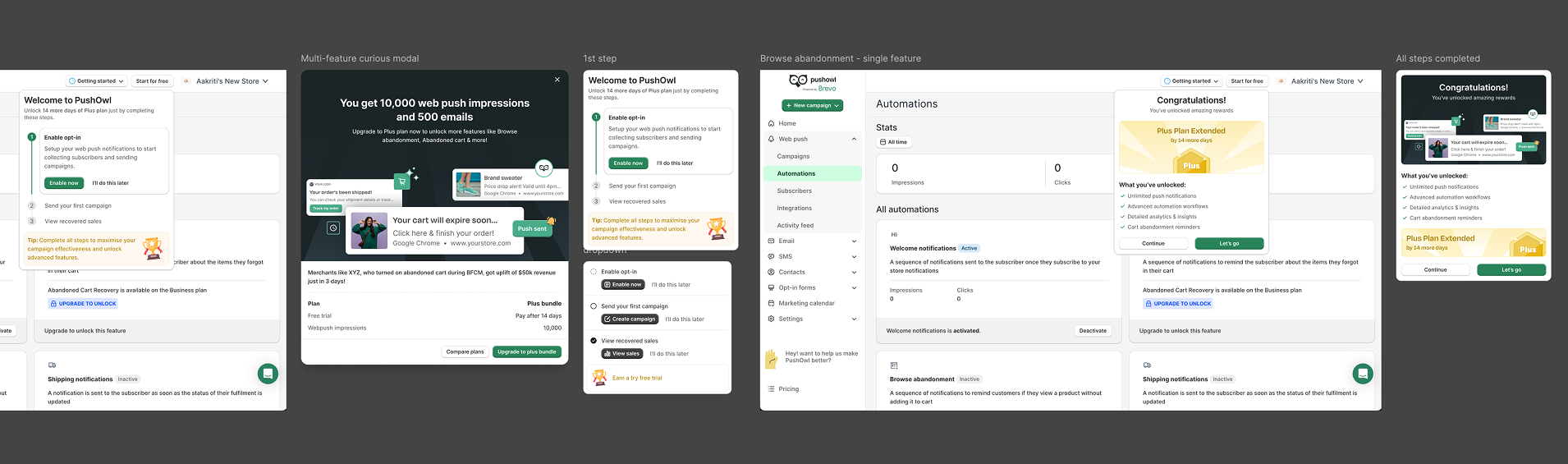

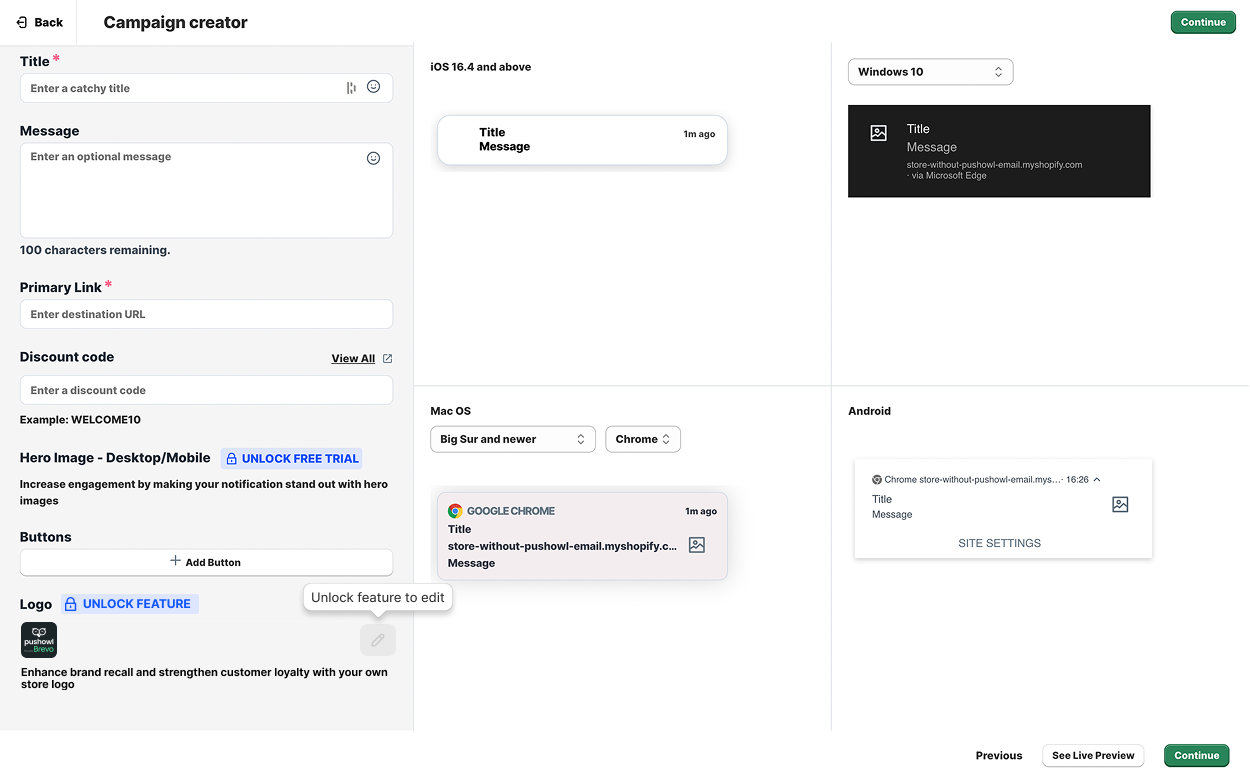

Each surface is a gentle nudge layered on top of the trust work - not a pop-up trap.

The nav chip shifts between default, "discount available," and "resume your draft" so stores can jump back in without hunting.
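The chip's three states could be chosen by a function like the one below. The precedence (an unsent draft outranks an available discount) is an assumption, on the reasoning that finishing a draft is the shortest path to a first win; the inputs are hypothetical flags.

```python
from enum import Enum


class NavChip(Enum):
    DEFAULT = "default"
    DISCOUNT_AVAILABLE = "discount available"
    RESUME_DRAFT = "resume your draft"


def nav_chip(has_unsent_draft: bool, discount_eligible: bool) -> NavChip:
    # Assumed precedence: resuming a draft beats surfacing a discount.
    if has_unsent_draft:
        return NavChip.RESUME_DRAFT
    if discount_eligible:
        return NavChip.DISCOUNT_AVAILABLE
    return NavChip.DEFAULT


print(nav_chip(has_unsent_draft=False, discount_eligible=True).value)  # discount available
```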

A lightweight modal appears when someone explores AI creatives or automations without starting the trial, clarifying cost and next step.

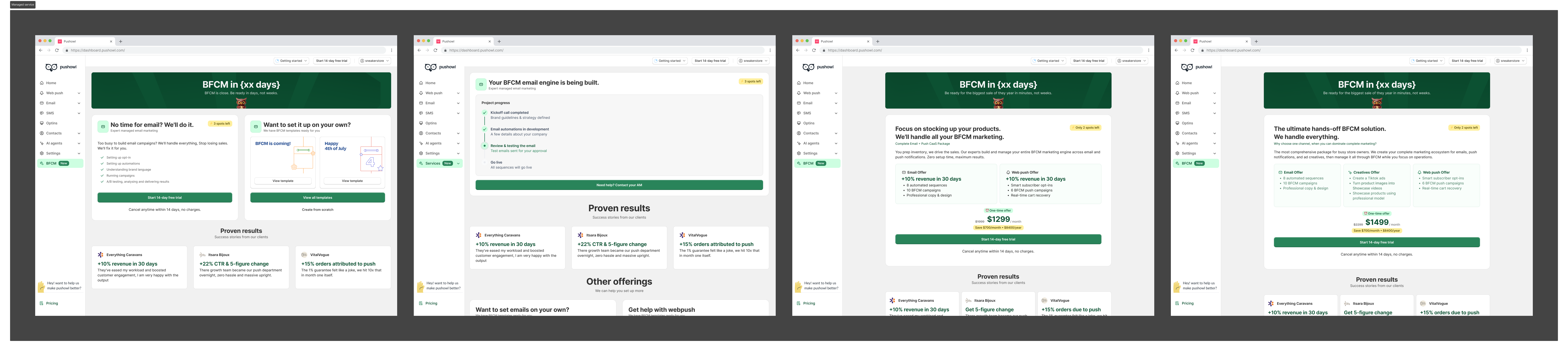

A seasonal tab bundles countdown, AI templates, and the managed card with capacity so "I'll do it later" becomes "I shipped today."

Each hypothesis was tested through controlled experiments. Some validated quickly, others required iteration, and one failed completely - leading to a better approach.

Pre-filled campaign with AI copy, product images, safe send time vs. blank campaign builder

% sending first campaign within 48 hours (0-100 order cohort)

✓ +42% Day-2 sends

What changed: Made AI bundle the primary offer for 0-100 order stores. Added "Edit before sending" safety copy to reduce send anxiety.
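To judge whether a lift like this reflects a real effect rather than noise, a standard approach is a two-proportion z-test on the control and variant send rates. The case study doesn't say which statistics PostHog reported, and PostHog's own experiment analysis may use a different method; this sketch uses the textbook pooled z-test with hypothetical counts, not the experiment's actual data.

```python
import math
from typing import Tuple


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float, float]:
    """Return (relative lift of B over A, z statistic, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    lift = (p_b - p_a) / p_a
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return lift, z, p_value


# Hypothetical counts: 200/1,000 control trials vs. 240/1,000 variant trials sent within 48 hours.
lift, z, p = two_proportion_test(200, 1_000, 240, 1_000)
print(f"lift={lift:.0%} z={z:.2f} p={p:.3f}")
```

A relative lift alone says nothing about sample size; the same 20% lift on 50 trials per arm would not clear a conventional significance bar.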

Show discounted trial in modal footer immediately vs. only after stall signal

Trial starts, trial value per signup

❌ Trial value dropped 18%

Why it failed:

Showing discount upfront conditioned users to wait. Standard-tier users saw it and hesitated, degrading overall trial quality.

What we changed:

Rolled back. Only surface discount after clear stall signals (modal closed without starting, 3+ days no progress). Cap exposure to once per user per month.
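A sketch of the rolled-back rule: only offer the discount after a stall signal, and at most once per user in a given window. Reading "once per month" as a rolling 30-day window is an assumption (a calendar-month cap would also fit the text), and the function and parameter names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional

NO_PROGRESS = timedelta(days=3)     # "3+ days no progress"
EXPOSURE_CAP = timedelta(days=30)   # assumption: "once per month" as a rolling 30-day window


def should_offer_discount(
    now: datetime,
    modal_closed_without_start: bool,
    last_progress_at: datetime,
    last_discount_shown_at: Optional[datetime],
) -> bool:
    stalled = modal_closed_without_start or (now - last_progress_at) >= NO_PROGRESS
    if not stalled:
        return False  # never show the safety net up front
    # Frequency cap: suppress if the discount was already shown inside the window.
    capped = (
        last_discount_shown_at is not None
        and (now - last_discount_shown_at) < EXPOSURE_CAP
    )
    return not capped
```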

Show "3 slots left this week" capacity indicator vs. generic "Book consultation" CTA

Consultation booking rate (10k+ order cohort)

✓ +67% bookings

What changed: Added a dynamic capacity ribbon ("2 slots left") driven by real team availability. Scarcity drove urgency without feeling manipulative because the counts were truthful.
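One way to keep the ribbon truthful is to derive it directly from the team's booked and available slots. In this sketch, the three-slot threshold for showing scarcity copy and hiding the CTA when fully booked are hypothetical choices; only the "N slots left" and "Book consultation" wording comes from the experiment.

```python
from typing import Optional


def capacity_label(capacity: int, booked: int, show_at_or_below: int = 3) -> Optional[str]:
    """Pick the booking CTA copy from real team availability for the week."""
    open_slots = max(capacity - booked, 0)
    if open_slots == 0:
        return None  # fully booked: hide the booking CTA rather than fake a slot
    if open_slots <= show_at_or_below:
        noun = "slot" if open_slots == 1 else "slots"
        return f"{open_slots} {noun} left this week"
    return "Book consultation"  # plenty of room: generic CTA, no scarcity claim


print(capacity_label(capacity=5, booked=3))  # 2 slots left this week
```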

Tailored copy per bucket ("Launch your first campaign" for 0-100 vs. "Scale your campaigns" for 10k+) vs. generic copy

Trial start rate by cohort

✓ +19% overall lift

What changed: Created 4 copy variants matched to each segment's maturity level. Small stores saw "first win" language, large stores saw "scale" language.

Key learning: Not all hypotheses validated. The discount timing experiment taught us that visibility matters as much as the offer itself. Showing safety nets too early degraded perceived value.

Four cohorts, one system. Each store gets a primary offer and a secondary path tuned to their reality.

Promise: Launch your first campaign today with AI creatives.

Primary: AI Bundle trial with outcome-first language.

Secondary: Fair trial leading to a discounted fallback if you pause.

Watch: First campaign inside two days, unsubscribe safety, support load.

Promise: Standard trial first, discounted only if you stall.

Primary: Standard trial path.

Secondary: Discounted trial fallback when hesitation shows.

Watch: Trial value per signup, steady revenue quality.

Promise: Self-serve plus AI assist; managed teaser after a win.

Primary: Standard trial with AI creatives surfaced inside "Create."

Secondary: Managed teaser that appears once a first win ships.

Watch: Day-2 ship rate and healthy escalations to managed.

Promise: People-led setup with a tight SLA.

Primary: Managed setup plan near our upper tier.

Secondary: Self-serve trial stays available but not primary.

Watch: Consult bookings, plan upgrades, and same-day time-to-value.
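The four cohort-to-offer pairings above amount to a routing table keyed by order bucket. In the sketch below, the 100 and 10,000 order boundaries come from the case study, but `MID_CUTOFF` is a hypothetical placeholder because the boundary between the two middle cohorts isn't stated; the cohort keys and offer identifiers are likewise illustrative names, not PushOwl's.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Offer:
    primary: str
    secondary: str


SMALL_MAX = 100      # "0-100 order" cohort (from the case study)
MID_CUTOFF = 1_000   # hypothetical placeholder; the real boundary isn't stated
LARGE_MIN = 10_000   # "10k+ order" cohort (from the case study)

OFFERS: Dict[str, Offer] = {
    "new": Offer("ai_bundle_trial", "discounted_trial_fallback"),
    "growing": Offer("standard_trial", "discounted_trial_fallback"),
    "established": Offer("standard_trial_with_ai_creatives", "managed_teaser_after_first_win"),
    "enterprise": Offer("managed_setup", "self_serve_trial"),
}


def order_bucket(order_count: int) -> str:
    """Map a store's Shopify order count to its cohort key."""
    if order_count < 0:
        raise ValueError("order_count must be non-negative")
    if order_count <= SMALL_MAX:
        return "new"
    if order_count <= MID_CUTOFF:
        return "growing"
    if order_count < LARGE_MIN:
        return "established"
    return "enterprise"


def route(order_count: int) -> Offer:
    return OFFERS[order_bucket(order_count)]


print(route(50))  # Offer(primary='ai_bundle_trial', secondary='discounted_trial_fallback')
```

Keeping the cut-offs and the offer table as data rather than branching logic means a boundary can move after a new cohort analysis without touching the routing code.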

A structured approach: ideate, execute, and analyze to continuously improve the PLG strategy.

This wasn't a solo design effort. I worked closely with Engineering, Product, Customer Success, and Leadership on routing logic, success metrics, and the phased rollout strategy, shipping a system that balanced user needs with technical constraints and business goals.

Why phased? Each phase validated key assumptions before expanding scope. If AI templates failed in Phase 1, we'd pivot before building the full routing system. This de-risked the investment and allowed engineering to work incrementally.

Every offer was instrumented by order bucket, surface, and offer type. We tracked trial starts, time-to-first-campaign, trial value per signup, and qualitative support themes.
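A minimal sketch of what this instrumentation could look like as an event schema: each trial start carries the order bucket, UTM source, offer type, and surface, and counts are grouped by that four-part key. The offer types and surfaces are the ones listed in this case study; the event and field names are hypothetical, and the call that would ship these properties to an analytics tool such as PostHog is omitted.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Tuple

# Dimensions listed in the case study; the exact string values are assumptions.
OFFER_TYPES = {"primary", "fallback", "ai", "managed"}
SURFACES = {"modal", "topbar", "feature", "pricing", "seasonal"}


@dataclass(frozen=True)
class TrialStarted:
    order_bucket: str
    utm_source: str
    offer_type: str
    surface: str

    def __post_init__(self) -> None:
        # Reject unknown values so typos don't create phantom segments.
        if self.offer_type not in OFFER_TYPES:
            raise ValueError(f"unknown offer_type: {self.offer_type}")
        if self.surface not in SURFACES:
            raise ValueError(f"unknown surface: {self.surface}")

    def segment(self) -> Tuple[str, str, str, str]:
        return (self.order_bucket, self.utm_source, self.offer_type, self.surface)


def trial_starts_by_segment(events: Iterable[TrialStarted]) -> "Counter[Tuple[str, str, str, str]]":
    """Count trial starts per (bucket, UTM, offer type, surface) segment."""
    return Counter(e.segment() for e in events)
```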

Trial value per signup remained steady - we grew volume without diluting quality


Trial starts moved up meaningfully in the two smallest buckets and more trials shipped a campaign within two days.

Trial value per signup held steady while refund questions dropped after the billing copy changed.

Tooling: PostHog for experiments, Metabase for revenue cohorts, and Intercom tags plus Canny for qualitative themes.

Managed “audit to plan” flow: templatize scope, slot scheduling, and follow-through so ops keep pace with demand.

"PLG isn't about free trials - it's about removing friction until the first success feels inevitable."

A three-offer system (AI creatives, discounted trial fallback, managed setup) routed by order bucket and activated through lightweight nudges made PushOwl trials feel trustworthy and fast to value - lifting trial starts, first wins, and paid conversion without leaking trust or margin.

The insight that mattered most:

Segmentation isn't just about pricing tiers - it's about understanding that different users have different fears, time constraints, and definitions of "value." Treating a 50-order store the same as a 10,000-order brand was leaving money on the table.

The best growth doesn't come from aggressive tactics - it comes from designing systems that help users win faster than they expected.


Revenue Lift

Segmented offers and AI creatives unlocked higher-value conversions across all cohorts.


Top-Funnel Lift

More qualified trial starts generated through clearer messaging and bucket-aware routing.

Trial Value Maintained

We grew volume without diluting quality—trial value per signup remained steady.